VISUOSPATIAL NAVIGATION: AN INVESTIGATION INTO THE ROLE OF LOW LEVEL NAVIGATION CUES

Abstract

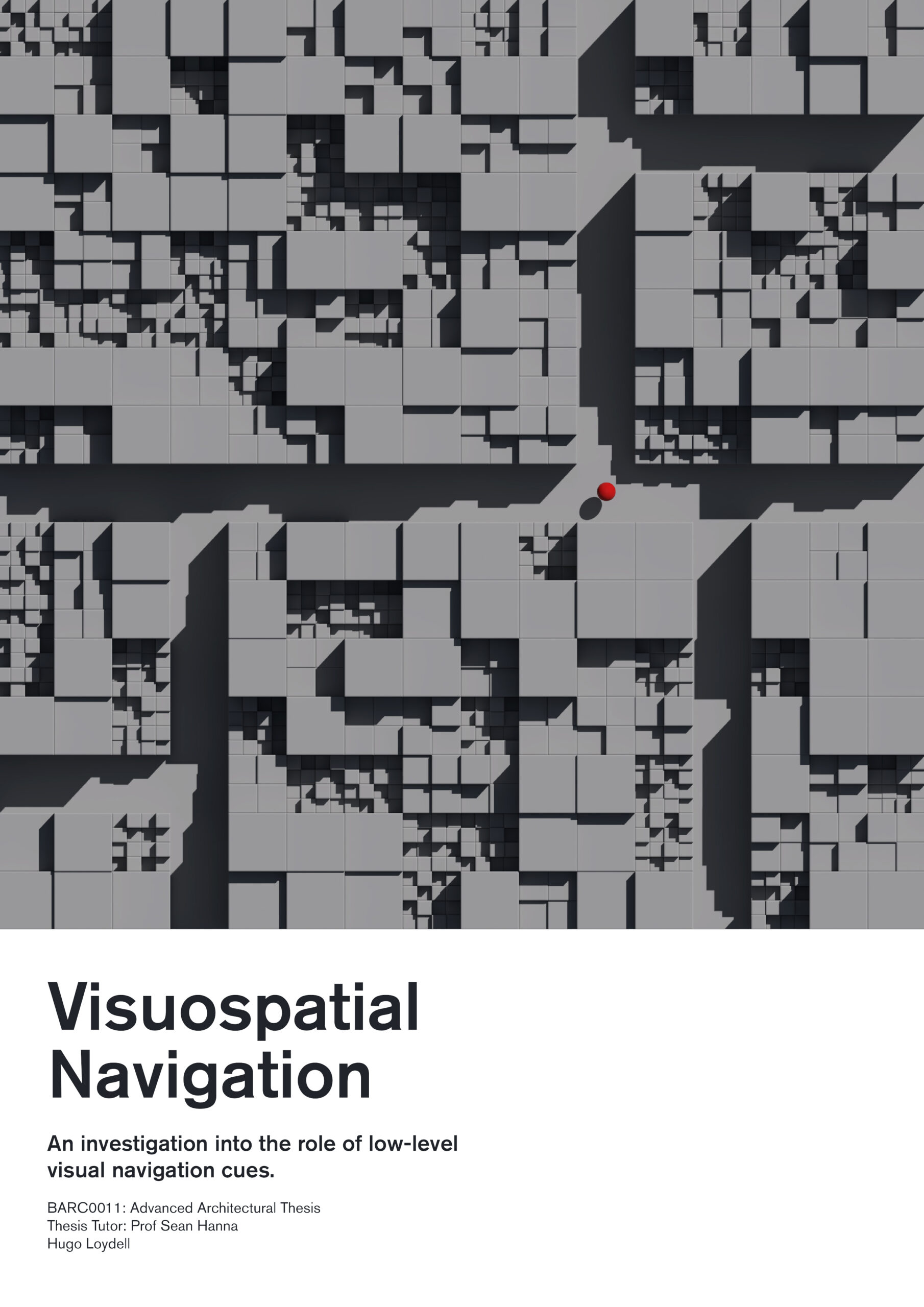

In this paper, I explore the role of low-level visual processing in navigation through a series of controlled, participant-based experiments, utilising eye-tracking to examine the significance of the collected navigational data and the relationship between lower-level visual processes and higher-level cognition. The intention is to challenge current navigation models, such as those adopted by Space Syntax. These models take primary influence from the research of James Gibson, who framed perception through environmental affordances and invariants, focusing on the physical implementation of vision rather than the fundamental processes which drive it. As a result, they often overlook the complexity of visual phenomena and the role of low-level visual processes in navigation. Through this thesis, I intend to further our understanding of navigation by extending our architectural understanding of spatial perception. I theorise that by investigating approaches which further consider the role of low-level neural processes in perception, such as those explored by David Marr, and incorporating these within the more holistic and context-driven views of James Gibson currently adopted in architectural discourse, we can design better spaces for navigation.

Current Space Syntax views on navigation through space prioritise depth cues in their effect upon navigational behaviour. In response, it was hypothesised that factors such as light and visual complexity, not solely depth cues, affect how we navigate space. This research interrogated these concepts by considering the role of visual complexity, light intensity, and depth. An experimental setup within a 3D virtual environment tested for effects of these parameters while tracking eye movement using an eye-tracking headset, and found participants' decisions to be impacted by these low-level visual cues, with the visual saliency of these cues affecting choices at a pre-attentive level. This suggests that both architects and Space Syntax navigation models could benefit from considering these low-level features in their analysis.